💡 Beyond the Basic Bot: An AI with a Memory

Most AI chatbots are forgetful. They might recall the last few messages in a conversation, but once the chat window closes, their “mind” is wiped clean. This makes for frustrating interactions, forcing you to repeat context and information constantly.

But what if your AI could genuinely remember you? What if it could store important facts, personal preferences, or user-provided notes and instructions, and recall them days, weeks, or months later?

This post breaks down the powerful workflow for building just such a system: an AI Agent Chatbot integrated with Telegram, featuring true long-term memory and note storage powered by the Google Gemini Chat Model.

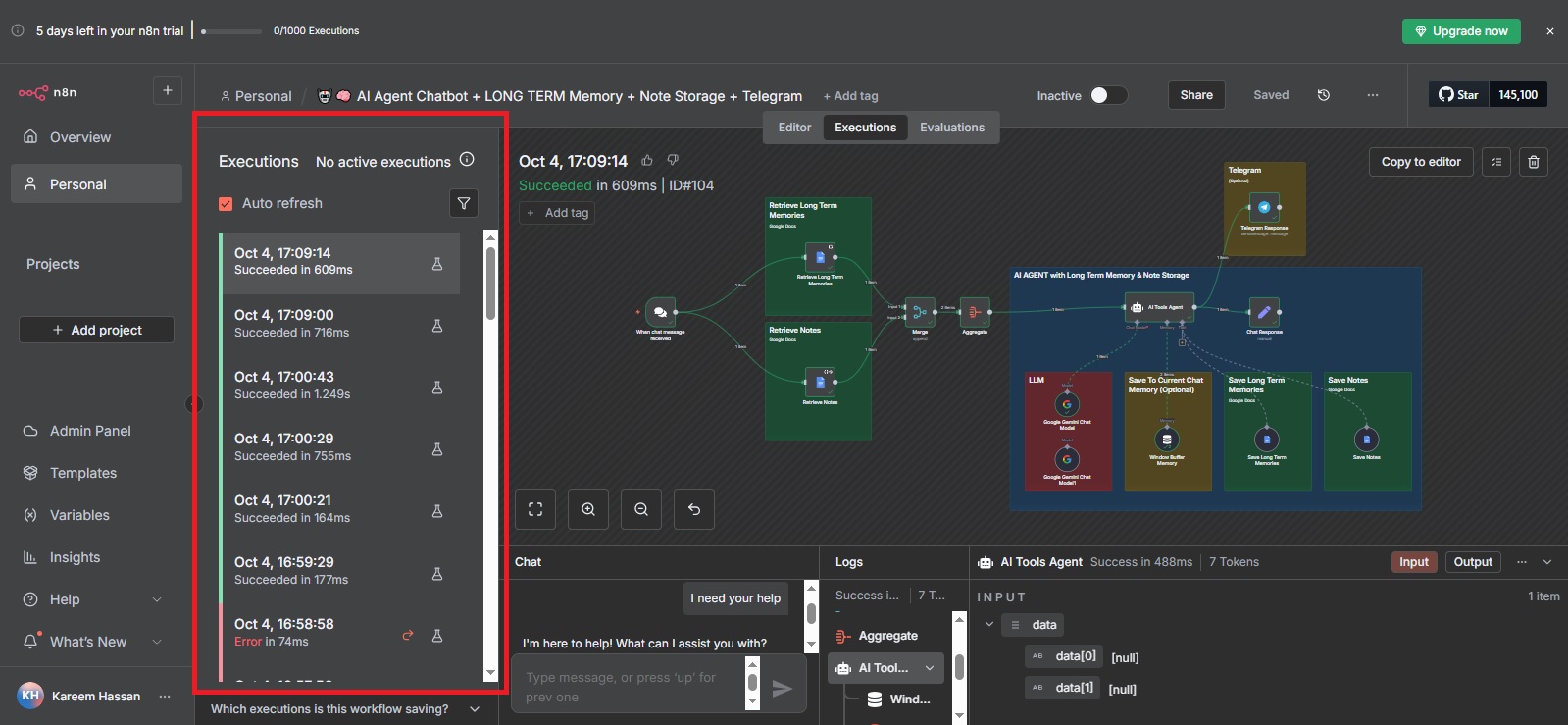

🏗️ The Architecture: How the AI Agent Thinks

The core of this system is a workflow that manages context, memory, and data retrieval. It turns a simple request-response loop into a stateful interaction that carries information from one session to the next.

1. The Trigger: A Simple Message

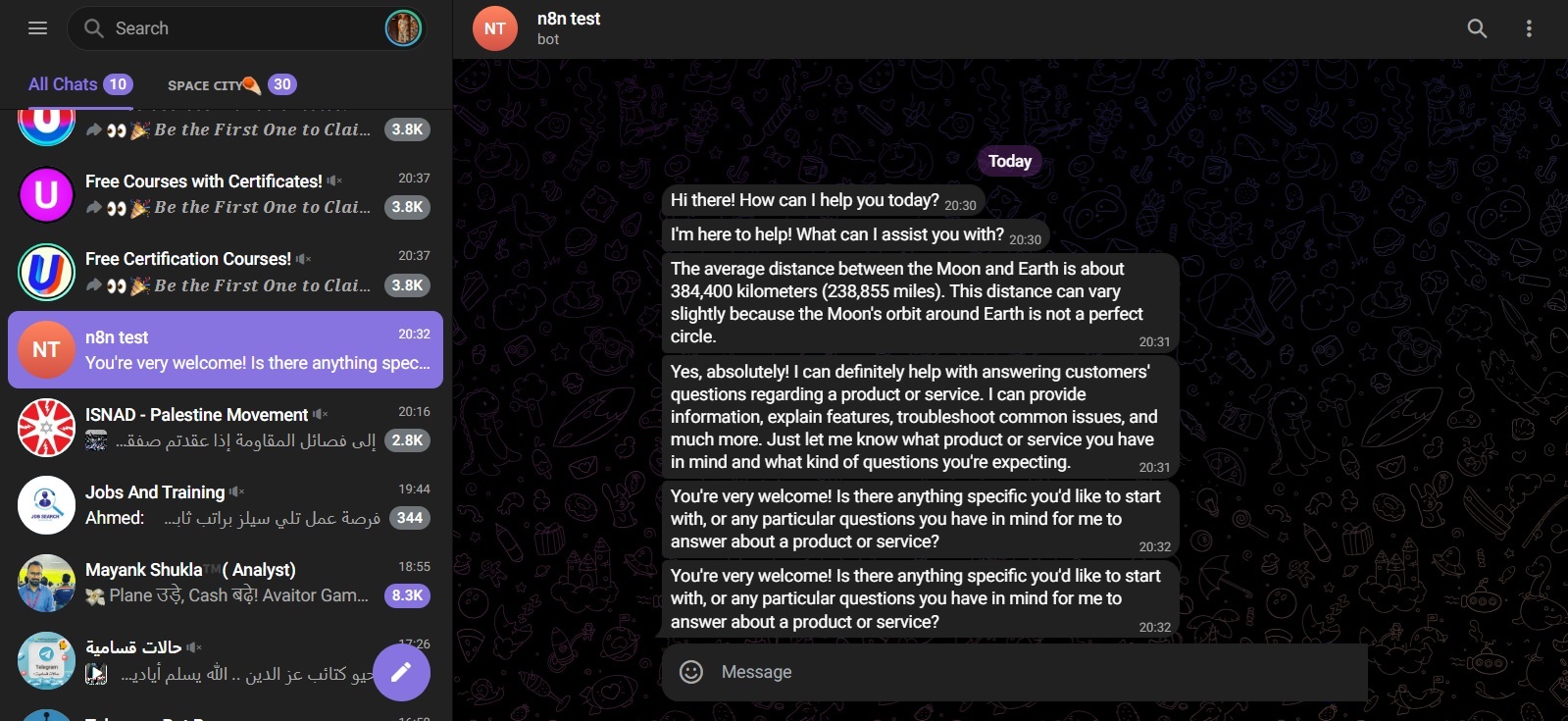

The journey begins with a familiar prompt: a user sends a message on Telegram.

- 🟢 Trigger: “When chat message received”

- This is the starting point. The new message is immediately captured to begin processing.

2. Contextual Retrieval: The Secret to Long-Term Memory

Before the AI generates any response, it first gathers all the necessary background information. This is where the magic of “memory” happens.

- 🟩 Retrieve Long Term Memories: This node accesses a store (like a Google Doc or a dedicated database) where the AI has previously saved important insights or facts from old conversations. This is essential for maintaining context across different sessions.

- 🟩 Retrieve Notes: This retrieves user-specific data, meaning the things the user explicitly told the AI to store: a list, a command, a user profile, or specific instructions for future interactions.
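To make the retrieval step concrete, here is a minimal sketch in Python. It assumes an in-memory store standing in for the Google Doc or database; the `MemoryStore` class, its method names, the `user-1` ID, and the sample note are all hypothetical and not part of the workflow itself.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """In-memory stand-in (an assumption) for the Google Doc or database."""

    memories: dict[str, list[str]] = field(default_factory=dict)
    notes: dict[str, list[str]] = field(default_factory=dict)

    def retrieve_long_term_memories(self, user_id: str) -> list[str]:
        # Return a copy so callers cannot change the store by accident.
        return list(self.memories.get(user_id, []))

    def retrieve_notes(self, user_id: str) -> list[str]:
        return list(self.notes.get(user_id, []))


# Hypothetical sample data for one user.
store = MemoryStore(
    memories={"user-1": ["The user's favorite color is blue"]},
    notes={"user-1": ["Dinner Idea: lentil soup"]},
)

# Both lookups run before the model is called.
context = {
    "memories": store.retrieve_long_term_memories("user-1"),
    "notes": store.retrieve_notes("user-1"),
}
```

A user with nothing stored simply gets two empty lists, so the rest of the flow does not need a special case for first-time users.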

3. The Context Fusion: Combining All the Information

The raw user message, the retrieved long-term memories, and the stored notes are all separate pieces of data. They need to be combined into one coherent package before being sent to the LLM.

- ⚙️ Merge + Aggregate: This node acts as the integrator. It cleanly structures the current user input with all the historical and user-stored context. The final, aggregated text serves as the rich, complete prompt for the AI model.
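The merge step can be pictured as a small function that turns three inputs into one prompt string. This is only a sketch: the `build_prompt` name, the section titles, and the sample data are assumptions, and the real node may structure the text differently.

```python
def build_prompt(user_message: str, memories: list[str], notes: list[str]) -> str:
    """Merge the current message with stored context into one prompt string."""

    def section(title: str, items: list[str]) -> str:
        # An empty section is stated explicitly so the model sees it is empty.
        body = "\n".join(f"- {item}" for item in items) if items else "- (none)"
        return f"{title}:\n{body}"

    return "\n\n".join([
        section("Long-term memories", memories),
        section("Notes", notes),
        f"User message:\n{user_message}",
    ])


# Hypothetical sample inputs.
prompt = build_prompt(
    "What should I cook tonight?",
    memories=["The user's favorite color is blue"],
    notes=["Dinner Idea: lentil soup"],
)
print(prompt)
```

Keeping the user message last is a design choice in this sketch, so that the question is the final thing the model reads after the background context.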

4. Sending the Response

- Chat Response: This node finalizes the answer generated by the Gemini model.

- 💬 Telegram (Optional Output): The context-aware reply is then sent back to the user on Telegram, completing the loop.

The Key Components

LLM (Google Gemini Chat Model)

This is the brain. It takes the merged context and user query, processes it with its vast knowledge and reasoning capabilities, and formulates the final reply.

Window Buffer Memory (Save to Current Chat Memory)

This handles the short-term memory. It ensures that within the current, ongoing chat session, the AI remembers what was said in the last few messages, maintaining a fluid conversation flow.
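A window buffer is easy to sketch with a fixed-size queue. The `WindowBufferMemory` class below is a hypothetical illustration of the idea, not the node's actual implementation, and the window size of 2 is chosen only to keep the example short.

```python
from collections import deque


class WindowBufferMemory:
    """Keeps only the most recent `window_size` messages of a session."""

    def __init__(self, window_size: int = 5) -> None:
        # A deque with maxlen drops the oldest item automatically when full.
        self._messages = deque(maxlen=window_size)

    def add(self, role: str, text: str) -> None:
        self._messages.append((role, text))

    def history(self) -> list:
        return list(self._messages)


memory = WindowBufferMemory(window_size=2)
memory.add("user", "Hi")
memory.add("assistant", "Hello!")
memory.add("user", "Remember my trip?")

# Only the last two messages remain; the first "Hi" has been dropped.
recent = memory.history()
```

This is also why short-term memory alone is not enough: anything that falls out of the window is gone unless it was saved to the long-term store.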

Save Long-Term Memories (Google Docs)

A crucial step! After the Gemini model processes the request, it might extract a new key piece of information (e.g., "The user’s favorite color is blue" or "The user is planning a trip in December"). This node saves that new insight to the long-term memory store for future retrieval.
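Here is one way the save step could look, assuming the model has already returned a list of extracted facts. The `save_long_term_memories` function, the date-prefixed entry format, and the duplicate check are all assumptions made for this sketch; a plain list stands in for the Google Doc.

```python
from datetime import date


def save_long_term_memories(store: list[str], new_facts: list[str], today: date) -> list[str]:
    """Append facts not already stored, prefixed with the date they were learned."""
    # Strip the "YYYY-MM-DD: " prefix from existing entries to compare facts only.
    known = {entry.partition(": ")[2] for entry in store}
    saved = []
    for fact in new_facts:
        fact = fact.strip()
        if fact and fact not in known:
            entry = f"{today.isoformat()}: {fact}"
            store.append(entry)
            known.add(fact)
            saved.append(entry)
    return saved


memory_doc: list[str] = []  # stand-in for the long-term memory document

first_saved = save_long_term_memories(
    memory_doc,
    ["The user's favorite color is blue", "The user is planning a trip in December"],
    date(2025, 1, 15),  # hypothetical date
)

# The same fact arriving a day later is skipped instead of stored twice.
second_saved = save_long_term_memories(
    memory_doc, ["The user's favorite color is blue"], date(2025, 1, 16)
)
```

Skipping duplicates keeps the memory store small, which matters because everything in it is fed back into the prompt on later messages.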

Save Notes (Google Docs)

If the user's message was an explicit instruction to store data (e.g., "Save this recipe as 'Dinner Idea'"), this node handles storing that raw user-created content.
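Note saving can be sketched as a small tool the agent calls once it has recognized a save request. The `save_note` function, the title-to-content dictionary, and the confirmation wording below are hypothetical; how the workflow detects the request and writes to Google Docs is not shown.

```python
def save_note(notes: dict[str, str], title: str, content: str) -> str:
    """Store raw user content under a title and return a confirmation message."""
    title = title.strip()
    if not title:
        raise ValueError("a note needs a non-empty title")
    # Saving under an existing title overwrites it (a choice made for this sketch).
    action = "Updated" if title in notes else "Saved"
    notes[title] = content.strip()
    return f"{action} note '{title}'."


notes_doc: dict[str, str] = {}  # stand-in for the notes document

first_reply = save_note(notes_doc, "Dinner Idea", "Lentil soup with lemon")
second_reply = save_note(notes_doc, "Dinner Idea", "Lentil soup with lemon and cumin")
```

Unlike long-term memories, the content here is stored exactly as the user wrote it, with no summarizing by the model.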

🔁 Summary: A Conversation with Context

In short, the workflow follows this logic:

- Hear: User sends a message on Telegram.

- Recall: The system fetches the memories and notes stored for the user.

- Process: The Gemini model generates a smart, tailored reply using all available context.

- Learn: New insights from the interaction are saved for future conversations.

- Reply: The answer is delivered back to the user.
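The whole loop can be sketched in a few lines of Python. Everything here is an assumption made for illustration: `handle_message` is a hypothetical function, and `fake_generate` and `fake_extract` are stubs standing in for the Gemini model, which is not called in this example.

```python
from typing import Callable


def handle_message(
    user_message: str,
    memories: list[str],
    notes: list[str],
    generate: Callable[[str], str],
    extract_facts: Callable[[str], list[str]],
) -> str:
    # Recall: put stored context in front of the new message.
    context = "\n".join(["Memories:", *memories, "Notes:", *notes])
    # Process: ask the model for a reply using all available context.
    reply = generate(f"{context}\nUser: {user_message}")
    # Learn: store any new facts for future conversations.
    for fact in extract_facts(user_message):
        if fact not in memories:
            memories.append(fact)
    # Reply: hand the answer back to the caller (Telegram in the post).
    return reply


def fake_generate(prompt: str) -> str:
    # Stub model: answers from memory only if the fact is in the prompt.
    if "The user's favorite color is blue" in prompt:
        return "You told me your favorite color is blue."
    return "Got it."


def fake_extract(message: str) -> list[str]:
    # Stub extractor: recognizes exactly one hard-coded fact.
    if "favorite color is blue" in message.lower():
        return ["The user's favorite color is blue"]
    return []


memories: list[str] = []
first = handle_message("My favorite color is blue", memories, [], fake_generate, fake_extract)
second = handle_message("What is my favorite color?", memories, [], fake_generate, fake_extract)
```

The second call only succeeds because the first one saved a fact, which is the difference between a stateless bot and one with long-term memory.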

By layering retrieval and storage nodes around a powerful LLM like the Google Gemini Chat Model, you transform a fleeting chatbot interaction into a personal, context-rich, and genuinely useful AI assistant. It’s time to build an AI that doesn’t just chat, but remembers and learns.

🔗 Discover More

The kind of infrastructure expertise required to design, deploy, and scale next-generation AI solutions is rare. Professionals who can bridge the gap between AI development and secure, performant system architecture are essential for moving from concept to production.

Explore Kareem Hassan’s full professional profile on Ka.nz to see how his infrastructure expertise powers next-generation AI solutions.

I am Aya Al Dorzi, a passionate community manager and project management graduate with a strong interest in marketing, digital strategy, and online business growth. I earned my bachelor’s degree in Operations Project Management and have since developed hands-on experience in content creation, community building, and digital marketing.

I currently work as a part-time blogger at Kanz.com, where I create engaging and informative content that connects with diverse audiences. Alongside this, I manage my own YouTube automation and e-commerce brand, focusing on developing creative strategies that drive growth and brand identity. I’m dedicated to continuous learning and aspire to expand my expertise in management, leadership, and digital entrepreneurship.